Qore BulkSqlUtil Module Reference

1.3


base class for bulk DML operations

Public Member Methods | |

| nothing | commit () |

| flushes any queued data and commits the transaction | |

| constructor (string name, SqlUtil::AbstractTable target, *hash opts) | |

| creates the object from the supplied arguments | |

| constructor (string name, SqlUtil::Table target, *hash opts) | |

| creates the object from the supplied arguments | |

| destructor () | |

| throws an exception if there is data pending in the internal row data cache; make sure to call flush() or discard() before destroying the object | |

| discard () | |

| discards any buffered batched data; this method should be called before destroying the object if an error occurs | |

| flush () | |

| flushes any remaining batched data to the database; this method should always be called before committing the transaction or destroying the object | |

| Qore::SQL::AbstractDatasource | getDatasource () |

| returns the AbstractDatasource object associated with this object | |

| int | getRowCount () |

| returns the affected row count | |

| SqlUtil::AbstractTable | getTable () |

| returns the underlying SqlUtil::AbstractTable object | |

| string | getTableName () |

| returns the table name | |

| queueData (hash data) | |

| queues row data in the block buffer; the block buffer is flushed to the DB if the buffer size reaches the limit defined by the block_size option; does not commit the transaction | |

| queueData (list l) | |

| queues row data in the block buffer; the block buffer is flushed to the DB if the buffer size reaches the limit defined by the block_size option; does not commit the transaction | |

| nothing | rollback () |

| discards any queued data and rolls back the transaction | |

| int | size () |

| returns the current size of the cache as a number of rows | |

Public Attributes | |

| const | OptionDefaults = ... |

| default option values | |

| const | OptionKeys = ... |

| option keys for this object | |

Private Member Methods | |

| abstract | flushImpl () |

| flushes queued data to the database | |

| flushIntern () | |

| flushes queued data to the database | |

| init (*hash opts) | |

| common constructor initialization | |

| setupInitialRow (hash row) | |

| sets up the block buffer given the initial template row for inserting | |

| setupInitialRowColumns (hash row) | |

| sets up the block buffer given the initial template hash of lists for inserting | |

Private Attributes | |

| softint | block_size |

| bulk operation block size | |

| hash | cval |

| "constant" row values; must be equal in all calls to queueData | |

| list | cval_keys |

| "constant" row value keys | |

| hash | hbuf |

| buffer for bulk operations | |

| *code | info_log |

| an optional info logging callback; must accept a sprintf()-style format specifier and optional arguments | |

| string | opname |

| operation name | |

| list | ret_args = () |

| list of "returning" columns | |

| int | row_count = 0 |

| row count | |

| SqlUtil::AbstractTable | table |

| the target table object | |

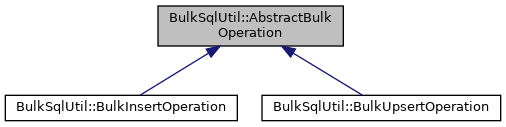

base class for bulk DML operations

This is an abstract base class for bulk DML operations; this class provides the majority of the API support for bulk DML operations for the concrete child classes that inherit it.

Queued data is flushed to the database automatically once block_size rows have been queued.

| BulkSqlUtil::AbstractBulkOperation::constructor | ( | string | name, |

| SqlUtil::AbstractTable | target, | ||

| *hash | opts | ||

| ) |

creates the object from the supplied arguments

| name | the name of the operation |

| target | the target table object |

| opts | an optional hash of options for the object, such as block_size (see OptionKeys for the supported keys) |

| BulkSqlUtil::AbstractBulkOperation::constructor | ( | string | name, |

| SqlUtil::Table | target, | ||

| *hash | opts | ||

| ) |

creates the object from the supplied arguments

| name | the name of the operation |

| target | the target table object |

| opts | an optional hash of options for the object, such as block_size (see OptionKeys for the supported keys) |

| BulkSqlUtil::AbstractBulkOperation::destructor | ( | ) |

| BulkSqlUtil::AbstractBulkOperation::discard | ( | ) |

discards any buffered batched data; this method should be called before destroying the object if an error occurs

| BulkSqlUtil::AbstractBulkOperation::flush | ( | ) |

flushes any remaining batched data to the database; this method should always be called before committing the transaction or destroying the object
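The flush() and discard() rules above can be illustrated with a small Python model. This is only a sketch of the described buffering behavior, not the Qore API: the class `BulkBufferSketch`, the function `load_rows`, and the rule that a row must have an `id` key are all hypothetical names and assumptions introduced for illustration.

```python
class BulkBufferSketch:
    """Hypothetical stand-in for a bulk operation object (not the Qore API)."""

    def __init__(self, block_size=3):
        self.block_size = block_size
        self.buffer = []    # rows queued but not yet written
        self.written = []   # rows already "sent to the database"

    def queue_data(self, row):
        self.buffer.append(row)
        # The buffer is written out as soon as it reaches block_size rows.
        if len(self.buffer) >= self.block_size:
            self.flush()

    def flush(self):
        self.written.extend(self.buffer)
        self.buffer.clear()

    def discard(self):
        self.buffer.clear()

    def size(self):
        return len(self.buffer)


def load_rows(op, rows):
    """Queue rows, then flush on success or discard on error."""
    try:
        for row in rows:
            if "id" not in row:   # assumed validation rule, for illustration
                raise ValueError("row has no id")
            op.queue_data(row)
    except ValueError:
        op.discard()   # drop the partial batch before the object is destroyed
        return False
    op.flush()         # write the remainder before committing
    return True
```

In a real transaction the error path would also roll back any blocks that had already been flushed; the model leaves that step out.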

| BulkSqlUtil::AbstractBulkOperation::queueData | ( | hash | data | ) |

queues row data in the block buffer; the block buffer is flushed to the DB if the buffer size reaches the limit defined by the block_size option; does not commit the transaction

| data | the input record, or a record set if a hash of lists is passed; each hash represents a row (keys are column names and values are column values); when inserting, SQL Insert Operator Functions can also be used. If at least one hash value is a list, then every value that is neither a list nor a hash (a hash indicates an insert operator) is assumed to be constant for every row; future calls to this method (and its overloaded variants) ignore any values given for such keys and use the values from the first call. |
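The hash-of-lists form with constant values may be easier to follow as a worked example. The Python sketch below models only the rule described for the data argument; `expand_columns` is a hypothetical helper, insert operator hashes are not modeled, and the requirement that all lists have the same length is an assumption of the sketch.

```python
def expand_columns(data, constants=None):
    """Expand a hash of lists into a list of row dicts.

    Scalar values seen in the first call become constants; later calls
    reuse those constants and ignore new values given for the same keys.
    Returns (rows, constants).
    """
    lists = {k: v for k, v in data.items() if isinstance(v, list)}
    if not lists:
        # No list values: the hash is a single row and nothing is constant.
        return [dict(data)], constants
    if constants is None:
        constants = {k: v for k, v in data.items() if not isinstance(v, list)}
    lengths = {len(v) for v in lists.values()}
    if len(lengths) > 1:
        raise ValueError("all column lists must have the same length")
    count = lengths.pop()
    rows = []
    for i in range(count):
        row = {k: v[i] for k, v in lists.items()}
        row.update(constants)   # constants from the first call win
        rows.append(row)
    return rows, constants


first, consts = expand_columns({"id": [1, 2], "source": "import"})
# first == [{"id": 1, "source": "import"}, {"id": 2, "source": "import"}]
second, _ = expand_columns({"id": [3], "source": "other"}, consts)
# second == [{"id": 3, "source": "import"}]; "other" is ignored
```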

| BulkSqlUtil::AbstractBulkOperation::queueData | ( | list | l | ) |

queues row data in the block buffer; the block buffer is flushed to the DB if the buffer size reaches the limit defined by the block_size option; does not commit the transaction

| l | a list of hashes representing the input row data; each hash represents a row (keys are column names and values are column values); when inserting, SQL Insert Operator Functions can also be used |

| int BulkSqlUtil::AbstractBulkOperation::size | ( | ) |

returns the current size of the cache as a number of rows
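As a rough illustration of how the value returned by size() would change while rows are queued, the following Python sketch counts buffered rows under the flush rule described for queueData(). The function `sizes_after_each_row` is hypothetical and models only the counting, assuming the buffer is emptied as soon as it reaches block_size rows.

```python
def sizes_after_each_row(n_rows, block_size):
    """Return the buffered row count observed after each queued row."""
    buffered = 0
    sizes = []
    for _ in range(n_rows):
        buffered += 1
        if buffered >= block_size:   # limit reached: the batch is written out
            buffered = 0
        sizes.append(buffered)
    return sizes


print(sizes_after_each_row(5, 3))   # prints [1, 2, 0, 1, 2]
```

A nonzero result at the end of input means rows are still pending, which is the case where flush() or discard() has to be called before the object is destroyed.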